Introduction: Seeing with WiFi signals

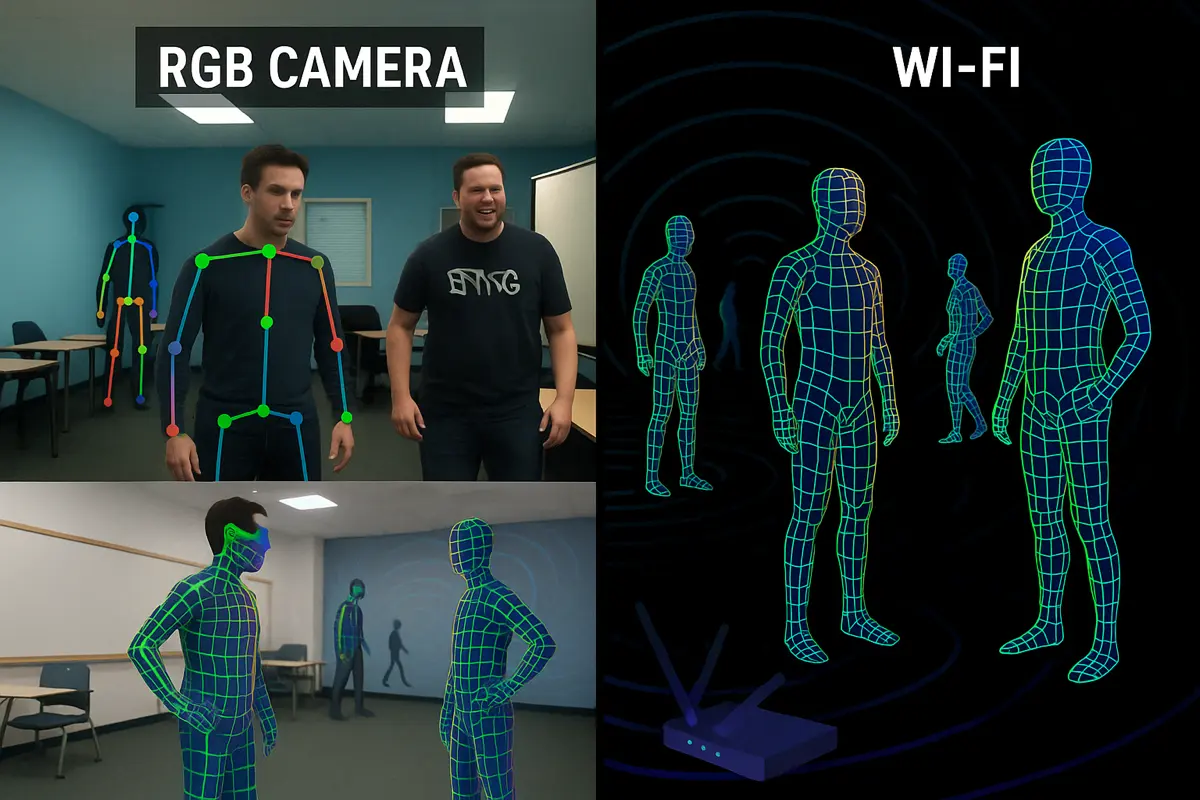

Imagine if your home’s WiFi router could “see” you moving around, without any cameras. Recent advances in AI are turning this into reality. Researchers have discovered how to use ordinary WiFi signals – the same radio waves that carry internet to your phone – to reconstruct a detailed 3D pose of the human body. By analyzing subtle changes in the amplitude (signal strength) and phase (wave timing) of WiFi radio waves, a deep neural network can map people’s movements in space, similar to how a camera-based system might, but without any optical camera at all. This approach is not only surprisingly accurate, it also works in the dark or through walls, offering a privacy-friendly and low-cost alternative to cameras.

Why use WiFi to sense people? Unlike cameras, radio waves aren’t blocked by poor lighting or most obstacles – they can pass through walls and furniture, meaning a WiFi-based “vision” system can track people even when a camera would see nothing. And because WiFi does not capture detailed visual appearance, it is inherently more private – no actual video or image of the person is recorded, just abstract signal data. Most households already have WiFi, so this technology could be deployed without expensive new hardware. In contrast, traditional 3D sensing systems like LiDAR (laser scanners) or mmWave radars require specialized, costly devices. A single decent LiDAR can cost hundreds of dollars and consume significant power, whereas WiFi transceivers are cheap and ubiquitous. Camera-based vision, for its part, struggles with dark environments, glare, or occlusions (when something blocks the view). And of course, few people want cameras monitoring them in private spaces like living rooms or bathrooms. As the researchers put it, WiFi signals “protect individuals’ privacy and the required equipment can be bought at a reasonable price”. In other words, WiFi has the potential to be a ubiquitous, unobtrusive sensor for human activity.

To put this in perspective, scientists have been experimenting with WiFi sensing for years. Back in 2013, a team at MIT showed they could detect the mere presence of a person through walls using WiFi signal reflections, essentially creating a rudimentary stick-figure of a moving person by tracking where the radio waves were disturbed. Since then, others have used WiFi for coarse tasks like detecting breathing, tracking a person’s location, or identifying basic gestures. In 2019, one project (dubbed “Person-in-WiFi”) even detected 2D body joints and body outlines via WiFi, hinting at the rich information latent in these signals. The new breakthrough, however, takes it to a whole new level: full-body dense pose estimation using only WiFi. In essence, the AI is filling in those stick-figures with a detailed human mesh, outputting an estimate of the position of every part of the body, not just key joints. The result is comparable to computer vision systems that use regular cameras – except here the “camera” is a set of WiFi antennas and the invisible waves that envelop us.

Before diving into how it works, let’s clarify two key concepts: amplitude and phase. These are properties of any radio wave (including WiFi) that together encapsulate the wave’s behavior. Amplitude is the strength or intensity of the signal (imagine the height of an ocean wave), while phase is the wave’s relative position in its cycle (imagine two ocean waves offset from each other – the offset is like the phase difference). When WiFi signals propagate in a room, they bounce off objects and people, and multiple antennas pick them up like SONAR. By comparing the phase of signals arriving at different antennas, one can infer distance or angle – similar to how our ears detect a sound’s direction by the tiny timing differences between left and right. Channel State Information (CSI) is the technical term for the rich data that WiFi devices can measure: it’s essentially a breakdown of the signal’s amplitude and phase across a bunch of sub-frequencies (subcarriers) and antenna pairs, giving a detailed fingerprint of the radio environment. The beauty of CSI is that it encodes how the environment (and people in it) affect the signal. The challenge is that CSI is messy – raw phase measurements can be inconsistent due to timing offsets, and noise or interference (from microwaves, phones, etc.) can muddle the data. That’s where deep learning comes in: the researchers developed a neural network pipeline to translate these WiFi signals into a 3D human pose. In the following sections, we’ll explore how this system compares to other sensing methods, and then unpack the fascinating way it works – from radio waves to human poses.

WiFi vs. Cameras, Radar, and LiDAR: A New Angle on Vision

It might sound incredible that WiFi could do the job of a camera or a laser scanner. Each technology “sees” the world differently, so let’s compare:

- Optical Cameras: They capture rich detail (textures, colors) but falter when lighting is poor or something is in the way. Cameras have a limited field of view and raise obvious privacy concerns – you know when you’re on camera, and many folks are uncomfortable with cameras at home. They’re also compute-intensive: extracting 3D pose from a 2D image is a hard vision problem.

- LiDAR: These sensors shoot out laser beams and measure precise distances to build a 3D point cloud of the environment. They can be very accurate for shapes and distances and work in darkness (since they supply their own laser light), but they’re expensive and power-hungry for continuous use. You’re not likely to scatter $500 laser units around your living room. Plus, while LiDAR preserves anonymity better than a camera, people might still balk at spinning lasers in their home.

- Radar (mmWave): Similar to WiFi in that it uses radio waves, radar systems (like those in some cars or Google’s Soli sensor) emit specialized signals and can detect motion and some shape information. They can also penetrate obstacles to some degree and work in all lighting. However, high-resolution radars for imaging are still relatively costly and often require expertise to deploy. They also typically have lower resolution than cameras or LiDAR – a radar might tell you something’s moving and roughly where, but not yield a detailed pose without complex algorithms.

WiFi sensing sits at a sweet spot between these. Standard WiFi routers operate on frequencies (~2.4 GHz or 5 GHz) that can pass through drywall and furniture, giving them a wider reach in indoor spaces. They also use multiple antennas and multiple frequencies (subcarriers), which effectively provides a crude “3D vision” via radio: differences in signal at the antennas can be used to infer spatial information. Importantly, WiFi is already everywhere – the hardware (routers, laptops) can often be repurposed for sensing with just a software update. For example, Linksys recently introduced a commercial service using mesh WiFi nodes as motion detectors (no cameras needed) by analyzing signal disturbances. The research we’re discussing goes further – not just detecting motion, but reconstructing the configuration of human bodies moving in the space.

Another big advantage is privacy. WiFi signals don’t reveal identity or facial features – in fact, the output of the system is a cartoon-like figure (a dense pose) rather than a photo. As one tech outlet quipped, “No cameras needed” for WiFi motion sensing. This could make people more comfortable with sensors in private spaces, since there’s no lens watching, no video being recorded. Of course, one can argue there are still privacy implications (it could track your movements room-to-room), but it’s certainly less invasive than a camera feed. The Carnegie Mellon researchers explicitly highlight that their WiFi-based pose estimator is privacy-preserving, illumination-invariant, and robust to occlusion – properties that address many shortcomings of traditional sensors.

In summary, WiFi-based vision won’t replace your smartphone camera for taking vacation photos – but for the task of sensing human position and movement in a space, it offers a compelling blend of ubiquity, cost-effectiveness, and performance in challenging conditions. Now, let’s unpack how one actually converts raw WiFi signals into a meaningful pose estimation. The process involves some clever signal processing and deep learning – effectively turning CSI data into an AI’s “eyes.”

From Signals to Silhouettes: How “DensePose from WiFi” Works

The core of this system is an AI model that maps WiFi radio data to a human body model. It’s aptly named DensePose-from-WiFi, after Facebook’s DensePose (a vision model that maps image pixels to the 3D surface of a human body). The WiFi approach produces a similar output – a dense correspondence of body surface points – but using radio waves as input. Here’s a step-by-step look at how the researchers achieved this:

1. WiFi CSI Data Collection & Setup

To teach an AI to see from WiFi, you first need a dataset of WiFi signals paired with “ground truth” human poses. The researchers used a setup with 3 transmitters and 3 receivers, arranged roughly at opposite sides of a room (think of two WiFi routers at opposite ends of a space, each with 3 antennas). In practice, they leveraged an existing dataset called Person-in-WiFi, which provided synchronized WiFi CSI streams and video recordings of people performing activities. Each transmitter and receiver have multiple antennas, yielding a 3×3 MIMO configuration (3 transmit × 3 receive antennas). The WiFi signals used the 802.11n WiFi standard at 2.4 GHz, and they recorded the CSI at 100 samples per second, while a camera captured video at 20 FPS for reference. In total, data was gathered in 16 different room layouts (various positions of routers, furniture, etc.) with 1–5 people in the scene at a time. This diversity was important for the model to learn to handle clutter and multiple subjects.

Each CSI sample from the WiFi card consists of a set of complex numbers for each antenna pair and subcarrier. The researchers focus on 30 subcarrier frequencies (spanning the WiFi channel) and the 3×3 antenna pairs. So one sample gives two 3×3 matrices: one for amplitude and one for phase (or equivalently, a single 3×3 matrix of complex values). To incorporate some temporal information (movement dynamics), they stack 5 consecutive CSI samples as the input to the neural network. This results in an input tensor of size 5×30×3×3 for amplitude and the same for phase – in other words, two tensors of shape 150×3×3 (amplitude, phase).

However, raw phase data from WiFi is notoriously fickle. Due to hardware quirks and the way WiFi protocols work, the phase of CSI can include random shifts each packet, causing discontinuities that have nothing to do with human motion. Signal sanitization is therefore a crucial preprocessing step.

2. Signal Sanitization – Cleaning Up Phase Information

To make the phase data usable, the team applied a series of signal processing fixes:

- Phase Unwrapping: Raw phase values jump between –π and π (–180° to 180°). If a person moves such that the phase exceeds this range, it “wraps around” abruptly. The researchers unwrap the phase over subcarriers to turn those jumps into smooth changes. Essentially, if they detect a sudden jump (e.g. from 179° to –179°), they add or subtract 2π to reconnect the curve. This yields continuous phase curves (no sudden 360° flips).